Sound recording and reproduction is an electrical or mechanical inscription and re-creation of sound waves, such as spoken voice, singing, instrumental music, or sound effects. The two main classes of sound recording technology are analog recording and digital recording.

In acoustic analog recording, a small microphone diaphragm senses changes in atmospheric pressure caused by acoustic sound waves, and these are recorded as a physical representation of the sound waves on a medium such as a phonograph record, into which a stylus cuts grooves.

In magnetic tape recording, the sound waves vibrate the microphone diaphragm and are converted into a varying electric current. An electromagnet converts this current into a varying magnetic field, which records a representation of the sound as magnetized areas on a plastic tape with a magnetic coating.

Analog sound reproduction is the reverse process, with a larger loudspeaker diaphragm causing changes in atmospheric pressure to form acoustic sound waves. Electronically generated sound waves may also be recorded directly from devices such as an electric guitar pickup or a synthesizer, without the use of acoustics in the recording process other than the need for musicians to hear how well they are playing during recording sessions.

Digital recording and reproduction converts the analog sound signal picked up by the microphone to a digital form by a process of digitization, allowing it to be stored and transmitted by a wider variety of media. Digital recording stores audio as a series of binary numbers representing samples of the amplitude of the audio signal at equal time intervals, at a sample rate high enough to convey all sounds capable of being heard (the rate must exceed twice the highest frequency to be reproduced). Digital recordings are considered higher quality than analog recordings, not necessarily because they have a wider frequency response or dynamic range, but because the digital format avoids much of the loss of quality that analog recording suffers from noise and electromagnetic interference during playback and from mechanical deterioration of, or damage to, the storage medium. A digital audio signal must be converted back to analog form during playback before it is applied to a loudspeaker or earphones.
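To make the idea of sampling concrete, here is a minimal Python sketch of linear PCM under simplifying assumptions: a pure sine wave is sampled at equal time intervals, and each sample is rounded to one of 2^bits signed integer levels. The function names are illustrative, and real converters also apply anti-aliasing filtering and dither, which this omits. Because the sample rate must exceed twice the highest frequency to be captured (the Nyquist limit), the 44.1 kHz rate used for CD audio covers hearing up to about 20 kHz.

```python
import math

def sample_and_quantize(freq_hz, sample_rate, n_samples, bits):
    """Sample a unit-amplitude sine wave and quantize each sample to a signed integer.

    Simplified linear PCM: samples are taken every 1 / sample_rate seconds and
    each amplitude is rounded to the nearest integer code. Real converters also
    apply anti-aliasing filters and dither, which are omitted here.
    """
    max_code = 2 ** (bits - 1) - 1          # e.g. 32767 for 16-bit, 7 for 4-bit
    codes = []
    for n in range(n_samples):
        t = n / sample_rate                  # time of the n-th sample in seconds
        amplitude = math.sin(2 * math.pi * freq_hz * t)
        codes.append(round(amplitude * max_code))
    return codes

def nyquist_limit(sample_rate):
    """Highest frequency the given sample rate can represent without aliasing."""
    return sample_rate / 2
```

For example, `sample_and_quantize(1000, 8000, 8, 4)` samples one cycle of a 1 kHz tone at 4-bit resolution and gives `[0, 5, 7, 5, 0, -5, -7, -5]`; with `bits=16` the same tone would use codes up to 32767, so the rounding error per sample becomes far smaller.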

The first device that could record actual sounds as they passed through the air was the phonautograph, but it could not play them back; its purpose was purely visual study. The earliest known recordings of the human voice are phonautograph recordings, called “phonautograms”, made in 1857. They consist of sheets of paper with sound-wave-modulated white lines created by a vibrating stylus that cut through a coating of soot as the paper was passed under it.

The earlier, purely acoustic methods of recording had limited sensitivity and frequency range. Notes in the middle of the frequency range could be recorded, but very low and very high frequencies could not. Instruments such as the violin transferred poorly to disc; however, this was partially solved by retrofitting a conical horn to the sound box of the violin. The horn was no longer required once electrical recording was developed.

Between the invention of the phonograph in 1877 and the advent of digital media, arguably the most important milestone in the history of sound recording was the introduction of what was then called “electrical recording”, in which a microphone was used to convert the sound into an electrical signal that was amplified and used to actuate the recording stylus. This innovation eliminated the “horn sound” resonances characteristic of the acoustical process, produced clearer and more full-bodied recordings by greatly extending the useful range of audio frequencies, and allowed previously unrecordable distant and feeble sounds to be captured.

Magnetic tape

Other important inventions of this period were magnetic tape and the tape recorder. This technology, the basis for almost all commercial recording from the 1950s to the 1980s, was invented by German audio engineers in the 1930s, who also discovered the technique of AC biasing, which dramatically improved the frequency response of tape recordings. Paper-based tape was used at first but was soon replaced by acetate and polyester backing because of dust drop and hiss. Acetate was more brittle than polyester and snapped easily.

Magnetic tape allowed the radio industry for the first time to pre-record many sections of program content such as advertising, which formerly had to be presented live, and it also enabled the creation and duplication of complex, high-fidelity, long-duration recordings of entire programs. Also, for the first time, broadcasters, regulators and other interested parties were able to undertake comprehensive logging of radio broadcasts. Innovations like multitracking and tape echo enabled radio programs and advertisements to be pre-produced to a level of complexity and sophistication that was previously unattainable, and the combined impact of these new techniques, together with innovations like the endless-loop broadcast cartridge, led to significant changes in the pacing and production style of program content.

Stereo and hi-fi

In 1931 Alan Blumlein, a British electronics engineer working for EMI, designed a way to make the sound of an actor in a film follow his movement across the screen. In December 1931 he submitted a patent including the idea, and in 1933 this became UK patent number 394,325. Over the next two years, Blumlein developed stereo microphones and a stereo disc-cutting head, and recorded a number of short films with stereo soundtracks.

Magnetic tape enabled the development of the first practical commercial sound systems that could record and reproduce high-fidelity stereophonic sound. The experiments with stereo during the 1930s and 1940s were hampered by problems with synchronization. A major breakthrough in practical stereo sound was made by Bell Laboratories, which in 1937 demonstrated a practical system of two-channel stereo using dual optical sound tracks on film. The first company to release commercial stereophonic tapes was EMI (UK). Its first “Stereosonic” tape was issued in 1954. Others followed quickly under both the His Master’s Voice and Columbia labels. In all, 161 Stereosonic tapes were released, most of them classical music or lyric recordings. These tapes were also imported into the USA by RCA.

Most pop singles were mixed in monophonic sound until the mid-1960s, and it was common for major pop releases to be issued in both mono and stereo until the early 1970s. Many 1960s pop albums now available only in stereo were originally intended to be released only in mono, and the so-called “stereo” versions of these albums were created by simply separating the two tracks of the master tape. In the mid-1960s, as stereo became more popular, many mono recordings were remastered using the so-called “fake stereo” method, which spread the sound across the stereo field by directing higher-frequency sound into one channel and lower-frequency sound into the other.
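The frequency-splitting idea behind “fake stereo” can be sketched in a few lines of Python. This is an illustration, not a reconstruction of any historical remastering process: it assumes a one-pole low-pass filter for the low band and takes the remainder (input minus low band) as the high band, so the two channels always sum back to the original mono signal.

```python
def one_pole_lowpass(samples, alpha):
    """One-pole low-pass filter: y[n] = y[n-1] + alpha * (x[n] - y[n-1]).

    alpha lies between 0 and 1; smaller values give a lower cutoff frequency.
    """
    out = []
    y = 0.0
    for x in samples:
        y += alpha * (x - y)
        out.append(y)
    return out

def fake_stereo(mono, alpha=0.1):
    """Split a mono signal into (left, right) by frequency band.

    Lows go to the left channel and the complementary highs to the right;
    which side gets which band is an arbitrary choice in this sketch.
    """
    low = one_pole_lowpass(mono, alpha)
    high = [x - l for x, l in zip(mono, low)]   # everything the low-pass removed
    return low, high
```

A steady (DC) input ends up almost entirely in the left channel, while a rapidly alternating input ends up mostly in the right; `alpha` sets roughly where the split falls.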

Digital recording

Graphical representation of a sound wave in analog (red) and 4-bit digital (black).

The advent of digital sound recording and later the compact disc in 1982 brought significant improvements in the durability of consumer recordings. The CD initiated another massive wave of change in the consumer music industry, with vinyl records effectively relegated to a small niche market by the mid-1990s. The introduction of digital systems was initially fiercely resisted by the record industry, which feared wholesale piracy on a medium able to produce perfect copies of original released recordings.

A digital sound recorder from Sony

The most recent and revolutionary developments have been in digital recording, with the development of various uncompressed and compressed digital audio file formats, processors capable and fast enough to convert the digital data to sound in real time, and inexpensive mass storage. These developments gave rise to a new type of device, the portable digital audio player. As technologies that increase the amount of data that can be stored on a single medium, such as Super Audio CD, DVD-A, Blu-ray Disc and HD DVD, become available, longer programs of higher quality fit onto a single disc. Sound files are readily downloaded from the Internet and other sources, and copied onto computers and digital audio players. Digital audio technology is used in all areas of audio, from casual use of music files of moderate quality to the most demanding professional applications. New applications such as internet radio and podcasting have appeared.

Technological developments in recording and editing have transformed the record, movie and television industries in recent decades. Audio editing became practicable with the invention of magnetic tape recording, but digital audio and cheap mass storage allow computers to edit audio files quickly, easily, and cheaply. Today, the process of making a recording is separated into tracking, mixing and mastering. Multitrack recording makes it possible to capture signals from several microphones, or from different ‘takes’, to tape or disc with maximized headroom and quality, allowing previously unavailable flexibility in the mixing and mastering stages for editing, level balancing, compressing and limiting, and adding effects such as reverb, equalization and flanging, to name a few. There are many different digital audio recording and processing programs running under computer operating systems for all purposes, from professional through serious amateur to casual user.

Digital audio workstation (DAW)

A digital audio workstation is an electronic system designed solely or primarily for recording, editing and playing back digital audio. DAWs were originally tapeless, microprocessor-based systems. Modern DAWs are software running on computers with audio interface hardware.

Integrated DAW

An integrated DAW consists of a mixing console, control surface, audio converter, and data storage in one device. Integrated DAWs were more popular before personal computers became powerful enough to run DAW software. As computer power increased and price decreased, the popularity of the costly integrated systems with console automation dropped. Today, some systems still offer computer-less arranging and recording features with a full graphical user interface (GUI).

Software DAW

A computer-based DAW has four basic components: a computer, a sound card (also called a sound converter or audio interface), digital audio editor software, and at least one input device for adding or modifying musical note data. The input device could be as simple as a mouse or as sophisticated as a MIDI controller keyboard or an automated fader board for mixing track volumes. The computer acts as a host for the sound card and software and provides processing power for audio editing. The sound card or external audio interface typically converts analog audio signals into digital form and, for playback, converts digital audio back to analog; it may also assist in further processing the audio. The software controls all related hardware components and provides a user interface for recording, editing, and playback. Most computer-based DAWs have extensive MIDI recording, editing, and playback capabilities, and some even have minor video-related features.

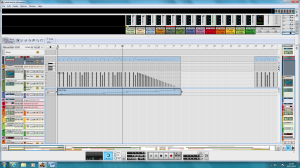

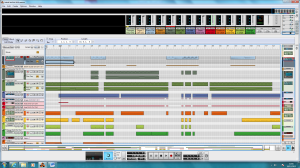

As software systems, DAWs could be designed with any user interface, but they are generally based on a multitrack tape recorder metaphor, which makes it easier for recording engineers and musicians already familiar with tape recorders to learn the new systems. Computer-based DAWs therefore tend to have a standard layout that includes transport controls (play, rewind, record, and so on), track controls and/or a mixer, and a waveform display. In single-track DAWs, only one sound, in mono or stereo form, is displayed at a time.

Multitrack DAWs support operations on multiple tracks at once. Like a mixing console, each track typically has controls that allow the user to adjust the overall volume and stereo balance (pan) of the sound on that track. In a traditional recording studio, additional processing is physically plugged into the audio signal path. A DAW, however, can route audio in software or use software plugins, such as VST plugins, to process the sound on a track.
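A track's volume fader and pan control can be modeled as two gains applied before the track is summed into a stereo bus. The sketch below assumes fader levels in decibels and a constant-power (sine/cosine) pan law, which is one common choice among several that DAWs use; the track dictionary keys are hypothetical.

```python
import math

def db_to_gain(db):
    """Convert a fader level in decibels to a linear amplitude factor."""
    return 10 ** (db / 20)

def constant_power_pan(pan):
    """Map pan in [-1.0 (hard left), +1.0 (hard right)] to (left, right) gains.

    Constant-power law: left**2 + right**2 == 1 at every pan position.
    """
    angle = (pan + 1) * math.pi / 4          # 0 .. pi/2
    return math.cos(angle), math.sin(angle)

def mix_tracks(tracks):
    """Sum mono tracks into a stereo pair.

    Each track is a dict with hypothetical keys 'samples', 'volume_db' and 'pan'.
    """
    length = max(len(t["samples"]) for t in tracks)
    left = [0.0] * length
    right = [0.0] * length
    for t in tracks:
        gain = db_to_gain(t["volume_db"])
        gl, gr = constant_power_pan(t["pan"])
        for i, s in enumerate(t["samples"]):
            left[i] += s * gain * gl
            right[i] += s * gain * gr
    return left, right
```

With this pan law a centered track is sent to each side at about 0.707 of its level (about -3 dB), so its total power stays the same as when it is panned hard to one side.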

Perhaps the most significant feature available from a DAW that is not available in analogue recording is the ability to ‘undo’ a previous action. Undo makes it much easier to avoid accidentally and permanently erasing or recording over a previous recording: if a mistake is made, the undo command reverts the changed data to a previous state. Cut, Copy, Paste, and Undo are familiar computer commands and are usually available in DAWs in some form. Other common functions modify properties of a sound such as its wave shape, pitch, and tempo, or apply filtering.
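One simple way to implement undo for sample edits is to push a copy of the data onto a history stack before every change. This Python sketch assumes that snapshot approach for clarity; it is memory-hungry, and many DAWs instead edit non-destructively by keeping references to unchanged audio. The class and method names are hypothetical.

```python
class EditableClip:
    """A list of samples with snapshot-based undo (illustrative only)."""

    def __init__(self, samples):
        self.samples = list(samples)
        self._history = []       # stack of earlier states, most recent last
        self.clipboard = []

    def _checkpoint(self):
        # Save a full copy of the current state before modifying it.
        self._history.append(list(self.samples))

    def cut(self, start, end):
        """Remove samples[start:end] and place them on the clipboard."""
        self._checkpoint()
        self.clipboard = self.samples[start:end]
        del self.samples[start:end]

    def paste(self, position):
        """Insert the clipboard contents at the given position."""
        self._checkpoint()
        self.samples[position:position] = self.clipboard

    def undo(self):
        """Revert to the state before the most recent edit, if there is one."""
        if self._history:
            self.samples = self._history.pop()
```

After `clip = EditableClip([1, 2, 3, 4, 5])`, calling `clip.cut(1, 3)` and then `clip.paste(3)` leaves `[1, 4, 5, 2, 3]`; two calls to `clip.undo()` restore the original `[1, 2, 3, 4, 5]`.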

Commonly DAWs feature some form of automation, often performed through “envelopes”. Envelopes are procedural line segment-based or curve-based interactive graphs. The lines and curves of the automation graph are joined by or comprise adjustable points. By creating and adjusting multiple points along a waveform or control events, the user can specify parameters of the output over time. MIDI recording, editing, and playback is increasingly incorporated into modern DAWs of all types, as is synchronization with other audio and/or video tools.
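A breakpoint envelope of this kind can be evaluated by linear interpolation between adjacent points. The sketch assumes straight line segments only (no curves) and holds the first and last values outside the envelope's time range; the names are illustrative rather than any DAW's API.

```python
from bisect import bisect_right

def envelope_value(points, t):
    """Evaluate a breakpoint envelope at time t by linear interpolation.

    points is a list of (time, value) pairs sorted by time. Before the first
    point and after the last one, the nearest value is held constant.
    """
    times = [p[0] for p in points]
    if t <= times[0]:
        return points[0][1]
    if t >= times[-1]:
        return points[-1][1]
    i = bisect_right(times, t)           # times[i-1] <= t < times[i]
    (t0, v0), (t1, v1) = points[i - 1], points[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)

def apply_gain_envelope(samples, sample_rate, points):
    """Volume automation: scale each sample by the envelope value at its time."""
    return [s * envelope_value(points, n / sample_rate) for n, s in enumerate(samples)]
```

The same evaluation works for any automated parameter, such as pan or filter cutoff; only the way the resulting value is applied changes.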

There are countless plugins for modern DAW software, each with its own functionality, which greatly expands the variety of sounds and manipulations that are possible. Plugin functions include distortion, resonators, equalizers, synthesizers, compressors, chorus, virtual amps, limiters, phasers, and flangers. Each has its own way of manipulating the waveform, tone, pitch, and speed of a simple sound to transform it into something different. To achieve an even more distinctive sound, multiple plugins can be layered and further automated to manipulate the original sound and mold it into a completely new sample.
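Layering plugins amounts to passing a track's audio through a chain of processors in order, so that the output of one becomes the input of the next. In this sketch each hypothetical “plugin” is a plain Python function on a list of samples; real plugin formats such as VST instead exchange buffers with the host through their own interfaces, which are not shown.

```python
import math

def gain(factor):
    """Hypothetical plugin that scales every sample by a constant factor."""
    return lambda samples: [s * factor for s in samples]

def soft_clip_distortion(drive):
    """Hypothetical distortion plugin using tanh waveshaping."""
    return lambda samples: [math.tanh(drive * s) for s in samples]

def hard_clipper(ceiling):
    """Hypothetical clipper that clamps samples to [-ceiling, +ceiling]."""
    return lambda samples: [max(-ceiling, min(ceiling, s)) for s in samples]

def run_chain(samples, plugins):
    """Process samples through plugins in order, like an insert chain on a track."""
    for plugin in plugins:
        samples = plugin(samples)
    return samples
```

Order matters: `run_chain([0.5], [gain(2.0), hard_clipper(0.4)])` yields `[0.4]`, while swapping the two plugins yields `[0.8]`.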

Sound cards

A sound card, also known as an audio card, is an internal computer expansion card that facilitates the input and output of audio signals to and from a computer under the control of computer programs. The term sound card is also applied to external audio interfaces that use software to generate sound, as opposed to using hardware inside the PC. Typical uses of sound cards include providing the audio component for multimedia applications such as music composition, video or audio editing, presentation, education and entertainment, and video projection.

Most sound cards use a digital-to-analog converter (DAC), which converts recorded or generated digital data into an analog format. The output signal is connected to an amplifier, headphones, or external device using standard interconnects, such as an RCA connector. More advanced cards usually include more than one sound chip to support higher data rates and multiple simultaneous functions, for example digital production of synthesized sounds, usually for real-time generation of music and sound effects using minimal data and CPU time.

Digital sound reproduction is usually done with multichannel DACs, which are capable of playing multiple digital samples simultaneously at different pitches and volumes and of applying real-time effects such as filtering or deliberate distortion. Multichannel digital sound playback can also be used for music synthesis and even for multiple-channel emulation. Most sound cards have a line-in connector for an input signal from a cassette tape or other sound source that has higher voltage levels than a microphone. The sound card digitizes this signal with an analog-to-digital converter (ADC) and transfers the samples to main memory, from where recording software may write them to the hard disk for storage, editing, or further processing. Another common external connector is the microphone connector, for signals from a microphone or other low-level input device. Input through a microphone jack can be used, for example, by speech recognition or voice over IP applications.

An important sound card characteristic is polyphony, which refers to its ability to process and output multiple independent voices or sounds simultaneously. Polyphony is distinct from the number of channels, that is, the number of audio outputs, which may correspond to a speaker configuration. Sometimes, the terms voice and channel are used interchangeably to indicate the degree of polyphony, not the output speaker configuration.

For some years, most PC sound cards have had multiple FM synthesis voices, typically 9 or 16, which were usually used for MIDI music. The full capabilities of advanced cards are often not used; usually only one (mono) or two (stereo) voices and channels are dedicated to playback of digital sound samples, and playing back more than one digital sound sample usually requires a software downmix at a fixed sampling rate. Modern low-cost integrated soundcards, such as audio codecs meeting the AC’97 standard, and even some lower-cost expansion sound cards still work this way. These devices may provide more than two sound output channels, typically 5.1 or 7.1 surround sound, but they usually have no actual hardware polyphony for either sound effects or MIDI reproduction; these tasks are performed entirely in software.
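Such a software downmix can be sketched as two steps: convert every sound to the fixed output rate, then sum the converted streams sample by sample and clamp the result to the output format. This Python version assumes signed 16-bit samples and uses linear interpolation for rate conversion, a crude stand-in for the higher-quality resamplers that real drivers and mixers use; the function names are hypothetical.

```python
def resample_linear(samples, src_rate, dst_rate):
    """Resample by linear interpolation between neighbouring source samples."""
    if src_rate == dst_rate:
        return list(samples)
    n_out = int(len(samples) * dst_rate / src_rate)
    out = []
    for n in range(n_out):
        pos = n * src_rate / dst_rate          # position in the source, in samples
        i = int(pos)
        frac = pos - i
        nxt = samples[i + 1] if i + 1 < len(samples) else samples[i]
        out.append(samples[i] + (nxt - samples[i]) * frac)
    return out

def software_downmix(sounds, output_rate):
    """Mix (samples, rate) pairs into one 16-bit stream at a fixed output rate."""
    converted = [resample_linear(s, rate, output_rate) for s, rate in sounds]
    length = max(len(c) for c in converted)
    mixed = []
    for n in range(length):
        total = sum(c[n] for c in converted if n < len(c))
        # Clamp to the signed 16-bit range so loud sums clip instead of wrapping.
        mixed.append(max(-32768, min(32767, round(total))))
    return mixed
```

Clamping keeps the sum inside the output format, but it also means that several loud sounds played together will clip; real mixers often reduce per-voice gain to leave headroom.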

Since digital sound playback became available and offered better performance than synthesis, modern soundcards with hardware polyphony do not actually use DACs with as many channels as voices; instead, they perform voice mixing and effects processing in hardware inside a dedicated DSP, possibly including digital filtering and conversions to and from the frequency domain for applying certain effects. The final playback stage is performed by an external DAC with significantly fewer channels than voices.

Professional soundcards are special soundcards optimized for low-latency multichannel sound recording and playback, including studio-grade fidelity. Their drivers usually follow the Audio Stream Input/Output (ASIO) protocol for use with professional sound engineering and music software, although ASIO drivers are also available for a range of consumer-grade soundcards.

Professional soundcards are usually described as “audio interfaces”, and sometimes have the form of external rack-mountable units using USB, FireWire, or an optical interface, to offer sufficient data rates. The emphasis in these products is, in general, on multiple input and output connectors, direct hardware support for multiple input and output sound channels, as well as higher sampling rates and fidelity as compared to the usual consumer soundcard. In that respect, their role and intended purpose is more similar to a specialized multi-channel data recorder and real-time audio mixer and processor, roles which are possible only to a limited degree with typical consumer soundcards.

In general, consumer-grade soundcards impose several restrictions and inconveniences that would be unacceptable to an audio professional. One of a modern soundcard’s purposes is to provide AD/DA (analog-to-digital and digital-to-analog) conversion. However, professional applications usually need enhanced recording (analog-to-digital conversion) capabilities. One of the limitations of consumer soundcards is their comparatively large sampling latency: the time it takes for the AD converter to complete conversion of a sound sample and transfer it to the computer’s main memory.
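Part of that latency comes from buffering: audio drivers typically move samples in fixed-size blocks, so each block adds its own duration to the delay. The sketch below assumes one input buffer and one output buffer in the path, plus an optional converter delay given in frames; driver and bus overheads, which vary by device, are ignored, and the function names are hypothetical.

```python
def buffer_latency_ms(buffer_frames, sample_rate):
    """Duration of one audio buffer in milliseconds."""
    return 1000.0 * buffer_frames / sample_rate

def round_trip_estimate_ms(buffer_frames, sample_rate, converter_delay_frames=0):
    """Rough input-to-output latency: one input buffer, one output buffer,
    plus any converter delay in frames. Driver and transport overhead is ignored."""
    frames = 2 * buffer_frames + converter_delay_frames
    return buffer_latency_ms(frames, sample_rate)
```

At 48 kHz, a 480-frame buffer lasts 10 ms, so the estimated round trip is already 20 ms before any converter delay; shrinking the buffer to 64 frames brings each buffer down to about 1.3 ms, at the cost of more frequent transfers.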

USB sound cards

USB sound “cards”, sometimes called “audio interfaces”, are usually external boxes that plug into the computer via USB. The term USB audio interface may also describe a device that lets a computer which has a sound card but lacks a standard audio socket connect, through its USB socket, to an external device that requires such a socket.

The USB specification defines a standard interface, the USB audio device class, allowing a single driver to work with the various USB sound devices and interfaces on the market. Even cards meeting the older, slow, USB 1.1 specification are capable of high quality sound with a limited number of channels, or limited sampling frequency or bit depth, but USB 2.0 or later is more capable.
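Whether a stream fits a USB link comes down to simple arithmetic: an uncompressed PCM stream needs channels × sample rate × bit depth bits per second. The sketch below compares that figure with the payload limit of one isochronous endpoint on full-speed USB (the fastest mode of USB 1.1), ignoring protocol overhead and other devices sharing the bus; the function and constant names are hypothetical.

```python
def pcm_bitrate(channels, sample_rate, bit_depth):
    """Raw PCM data rate in bits per second (no compression, no protocol overhead)."""
    return channels * sample_rate * bit_depth

# Full-speed USB (the top speed of USB 1.1) signals at 12 Mbit/s, and a single
# isochronous endpoint may carry at most 1023 bytes per 1 ms frame.
FULL_SPEED_ISO_MAX_BPS = 1023 * 8 * 1000

def fits_full_speed(channels, sample_rate, bit_depth):
    """Whether a raw stream fits one full-speed isochronous endpoint
    (a simplification that ignores other traffic on the bus)."""
    return pcm_bitrate(channels, sample_rate, bit_depth) <= FULL_SPEED_ISO_MAX_BPS
```

For example, CD-quality stereo (2 × 44,100 × 16) needs 1,411,200 bit/s, well within the 8,184,000 bit/s limit, whereas eight channels at 96 kHz and 24 bits (18,432,000 bit/s) do not fit, which is why such channel counts call for USB 2.0 or later.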

The main function of a sound card is to play audio, usually music, in varying formats (monophonic, stereophonic, and various multiple-speaker setups) and with varying degrees of control. The source may be a CD or DVD, a file, streamed audio, or any external source connected to a sound card input. Audio may also be recorded, although sometimes sound card hardware and drivers do not support recording a source that is being played. A card can also be used, in conjunction with software, to generate arbitrary waveforms, acting as an audio-frequency function generator.
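Generating a waveform in software is a matter of computing one value per sample from the current position within the cycle. This sketch produces naive (not band-limited) sine, square, sawtooth, and triangle waves as floating-point samples; sending them to the card's output through an audio API is not shown, and the function name and shape strings are hypothetical.

```python
import math

def generate(shape, freq_hz, sample_rate, n_samples, amplitude=1.0):
    """Generate basic waveforms as float samples in [-amplitude, amplitude].

    These shapes are not band-limited, so square and sawtooth waves alias at
    high frequencies; a real function-generator program would need to avoid that.
    """
    out = []
    for n in range(n_samples):
        phase = (freq_hz * n / sample_rate) % 1.0     # position within the cycle, 0..1
        if shape == "sine":
            v = math.sin(2 * math.pi * phase)
        elif shape == "square":
            v = 1.0 if phase < 0.5 else -1.0
        elif shape == "sawtooth":
            v = 2.0 * phase - 1.0
        elif shape == "triangle":
            v = 4.0 * abs(phase - 0.5) - 1.0
        else:
            raise ValueError(f"unknown shape: {shape}")
        out.append(amplitude * v)
    return out
```

For instance, `generate("square", 1000, 8000, 8)` returns one full cycle of a 1 kHz square wave: four samples at `1.0` followed by four at `-1.0`.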

A card can be used, again in conjunction with free or commercial software, to analyse input waveforms. For example, a very-low-distortion sinewave oscillator can be used as input to equipment under test; the output is sent to a sound card’s line input and run through Fourier transform software to find the amplitude of each harmonic of the added distortion. Alternatively, a less pure signal source may be used, with circuitry to subtract the input from the output, attenuated and phase-corrected; the result is distortion and noise only, which can be analysed. There are programs which allow a sound card to be used as an audio-frequency oscilloscope.
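The harmonic measurement described above can be sketched without a full FFT by computing the discrete Fourier transform only at the fundamental and its harmonics. This Python version assumes the buffer holds a whole number of cycles of the test tone; a practical analyser would normally use an FFT with a window function to tolerate arbitrary buffer lengths. The function names are hypothetical.

```python
import math

def dft_magnitude(samples, sample_rate, freq_hz):
    """Amplitude of one frequency component via a single-bin DFT.

    Exact only when freq_hz completes a whole number of cycles in the buffer;
    otherwise spectral leakage makes the result approximate.
    """
    n = len(samples)
    re = sum(s * math.cos(2 * math.pi * freq_hz * k / sample_rate) for k, s in enumerate(samples))
    im = sum(s * math.sin(2 * math.pi * freq_hz * k / sample_rate) for k, s in enumerate(samples))
    return 2 * math.hypot(re, im) / n     # scaled so a unit-amplitude sine gives 1.0

def thd(samples, sample_rate, fundamental_hz, n_harmonics=5):
    """Total harmonic distortion: RMS sum of harmonics 2..n over the fundamental."""
    fund = dft_magnitude(samples, sample_rate, fundamental_hz)
    harmonics = [dft_magnitude(samples, sample_rate, h * fundamental_hz)
                 for h in range(2, n_harmonics + 1)]
    return math.sqrt(sum(a * a for a in harmonics)) / fund
```

For a 1 kHz tone with a third harmonic at 1% of its amplitude, sampled at 48 kHz for 4,800 samples (exactly 100 cycles), `thd` returns about 0.01, that is, 1% distortion.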

The picture shows which frequencies are blocked and which are allowed through the signal chain. This is the fundamental idea behind subtractive synthesis.
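The principle can be sketched by starting from a harmonically rich sawtooth, described as a list of partials whose amplitudes fall as 1/k, and then removing the partials above a cutoff frequency. The idealized brick-wall filter here is an assumption made for clarity, since synthesizer filters roll off gradually, and the function names are hypothetical.

```python
import math

def sawtooth_partials(fundamental_hz, max_hz):
    """(frequency, amplitude) pairs of a band-limited sawtooth: harmonic k has amplitude 1/k."""
    partials = []
    k = 1
    while k * fundamental_hz <= max_hz:
        partials.append((k * fundamental_hz, 1.0 / k))
        k += 1
    return partials

def ideal_lowpass(partials, cutoff_hz):
    """Subtractive step: block every partial above the cutoff, pass the rest unchanged.

    A real synthesizer filter rolls off gradually (e.g. 12 or 24 dB per octave)
    instead of cutting sharply like this.
    """
    return [(f, a) for f, a in partials if f <= cutoff_hz]

def render(partials, sample_rate, n_samples):
    """Render the remaining partials as a sum of sine waves."""
    return [sum(a * math.sin(2 * math.pi * f * n / sample_rate) for f, a in partials)
            for n in range(n_samples)]
```

Lowering the cutoff removes more of the upper harmonics and makes the tone darker; sweeping it over time, for example with an envelope, gives the characteristic subtractive-synthesis “filter sweep”.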